QtSensorGestures Plugins

Explains how to develop recognizer plugins with QtSensorGestures.

The QtSensorGestures recognizer plugins are the way to create your own sensor gestures.

Creating software to recognize motion gestures using sensors is a huge subject not covered here.

The QSensorGesture API does not limit usage to any of the common classification methods of gesture recognition, such as Hidden Markov Models, Neural Networks, Dynamic Time Warping, or even the ad-hoc heuristic recognizers of Qt’s built-in sensor gesture recognizers. It is essentially a signaling system that lets lower-level gesture recognition methods and algorithms communicate with higher-level applications.

Overview

The steps for creating a sensor gesture plugin are as follows:

- Sub-class from QSensorGesturePluginInterface.
- Sub-class from QSensorGestureRecognizer and implement gesture recognizer logic using QtSensors.
- Create an instance of that recognizer in the derived QSensorGesturePluginInterface class, and call registerSensorGestureRecognizer(sRec); in your registerRecognizers() function. QSensorGestureManager will retain ownership of the recognizer object.

This is the class in which the gesture recognizer system should be implemented.

    // Recognizer: the gesture detection logic belongs in this class.
    MySensorGestureRecognizer::MySensorGestureRecognizer(QObject *parent)
        : QSensorGestureRecognizer(parent)
    {
    }

    MySensorGestureRecognizer::~MySensorGestureRecognizer()
    {
    }

    bool MySensorGestureRecognizer::start()
    {
        // Placeholder: emits the custom signal as soon as the recognizer starts.
        Q_EMIT mySignal();
        return true;
    }

    bool MySensorGestureRecognizer::stop()
    {
        return true;
    }

    bool MySensorGestureRecognizer::isActive()
    {
        return true;
    }

    void MySensorGestureRecognizer::create()
    {
    }

    QString MySensorGestureRecognizer::id() const
    {
        return QString("QtSensors.mygestures");
    }

    // Plugin: creates the recognizers and reports their ids.
    MySensorGesturePlugin::MySensorGesturePlugin() {}

    MySensorGesturePlugin::~MySensorGesturePlugin() {}

    QList<QSensorGestureRecognizer *> MySensorGesturePlugin::createRecognizers()
    {
        QList<QSensorGestureRecognizer *> recognizers;

        MySensorGestureRecognizer *recognizer = new MySensorGestureRecognizer(this);
        recognizers.append(recognizer);

        return recognizers;
    }

    QStringList MySensorGesturePlugin::supportedIds() const
    {
        // Must match the id returned by MySensorGestureRecognizer::id().
        return QStringList() << "QtSensors.mygestures";
    }

Recognizer Classes

If you are making sensor gestures available through the QtSensorGestures API, these are the classes to subclass.

- PySide2.QtSensors.QSensorGesturePluginInterface: The QSensorGesturePluginInterface class is the pure virtual interface to sensor gesture plugins.
- PySide2.QtSensors.QSensorGestureRecognizer: The QSensorGestureRecognizer class is the base class for a sensor gesture recognizer.

Recognizer Plugins

The Sensor Gesture Recognizers that come with Qt are made using an ad-hoc heuristic approach. The user cannot define their own gestures, and must learn how to perform and accommodate the pre-defined gestures herein.

A developer may use any method, including well-known classifiers that are computationally intensive and training-intensive, to produce gesture recognizers. There are currently no classes in Qt for gesture training, nor is it possible for users to define their own sensor-based motion gestures.
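As one illustration of the ad-hoc heuristic approach, here is a minimal sketch in plain Python, with no Qt dependency. It is not how Qt's built-in recognizers work. The class name `ShakeHeuristic`, the callback `on_detected` (a stand-in for emitting a signal), and every threshold value are assumptions made for this example.

```python
import math
from collections import deque
from typing import Callable, Deque

GRAVITY = 9.81  # m/s^2, approximate magnitude an accelerometer reports at rest


class ShakeHeuristic:
    """Hypothetical ad-hoc recognizer: several strong peaks in a short window."""

    def __init__(self, on_detected: Callable[[str], None],
                 threshold: float = 8.0, peaks_needed: int = 3,
                 window: int = 20) -> None:
        self.on_detected = on_detected    # stand-in for emitting a signal
        self.threshold = threshold        # assumed value, m/s^2 above rest
        self.peaks_needed = peaks_needed  # assumed number of peaks
        self.window = window              # assumed window length, in samples
        self._index = 0                   # running sample counter
        self._peaks: Deque[int] = deque() # sample indices of recent peaks
        self._above = False               # was the previous sample strong?

    def feed(self, x: float, y: float, z: float) -> None:
        """Process one accelerometer sample given in m/s^2."""
        self._index += 1
        excess = abs(math.sqrt(x * x + y * y + z * z) - GRAVITY)
        above = excess > self.threshold
        if above and not self._above:
            # Rising edge: the start of a new strong movement.
            self._peaks.append(self._index)
        self._above = above
        # Forget peaks that have fallen out of the sliding window.
        while self._peaks and self._index - self._peaks[0] >= self.window:
            self._peaks.popleft()
        if len(self._peaks) >= self.peaks_needed:
            self._peaks.clear()
            self.on_detected("shake")
```

In a real plugin, logic of this kind would sit inside a QSensorGestureRecognizer subclass and be fed from sensor readings rather than from direct `feed()` calls.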

A procedure for writing ad-hoc recognizers might include:

- Test the procedure to make sure the gesture is easy to perform and will not produce too many false positive recognitions or, if used with other gestures, collisions, meaning that a performed gesture gets recognized as another gesture instead.
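The testing step can be sketched as a small offline harness that replays labelled recordings through each recognizer and counts false positives and collisions. This is a plain-Python illustration under stated assumptions: the function `evaluate`, the one-dimensional traces, and the two toy recognizers are all hypothetical and not part of Qt.

```python
from typing import Callable, Dict, List, Optional, Sequence, Tuple

Trace = Sequence[float]               # hypothetical 1-D recording
Recognizer = Callable[[Trace], bool]  # returns True if the gesture fired


def evaluate(recognizers: Dict[str, Recognizer],
             labelled_traces: List[Tuple[Optional[str], Trace]]
             ) -> Dict[str, Dict[str, int]]:
    """Count hits, misses, false positives and collisions per recognizer.

    A label of None means no gesture was performed in that trace.
    """
    report = {name: {"hits": 0, "misses": 0,
                     "false_positives": 0, "collisions": 0}
              for name in recognizers}
    for label, trace in labelled_traces:
        for name, recognize in recognizers.items():
            fired = recognize(trace)
            stats = report[name]
            if label == name:
                stats["hits" if fired else "misses"] += 1
            elif fired:
                # Fired on no gesture (false positive) or on a
                # different gesture (collision).
                key = "false_positives" if label is None else "collisions"
                stats[key] += 1
    return report


# Toy recognizers, for illustration only.
toy_recognizers: Dict[str, Recognizer] = {
    "shake": lambda t: sum(1 for v in t if abs(v) > 15) >= 3,
    "whip": lambda t: min(t) < -15,
}

toy_traces: List[Tuple[Optional[str], Trace]] = [
    ("shake", [20, -20, 20, -20]),
    ("whip", [0, -18, 5]),
    (None, [0, 1, 0]),
    (None, [0, -16, 0]),
]

toy_report = evaluate(toy_recognizers, toy_traces)
```

With these toy inputs, the `whip` recognizer also fires on the shake recording and on one idle recording, which is the kind of collision and false positive this step is meant to surface.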

Below you will find a list of included plugins and their signals.

For the ShakeGestures plugin:

| Recognizer Id | Signals |
| --- | --- |
| QtSensors.shake | shake |

For the QtSensorGestures plugin:

Recognizer Id | Signals | Description | Images

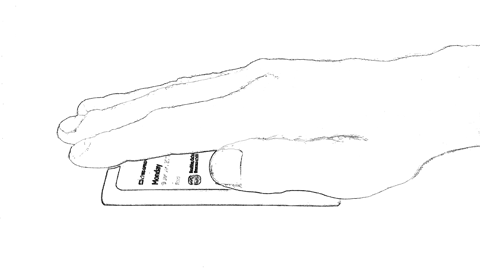

QtSensors.cover | cover | Hand covers up phone display for one second, when it’s face up, using the Proximity and Orientation sensors.

QtSensors.doubletap | doubletap | Double tap of finger on phone, using the DoubleTap sensor.

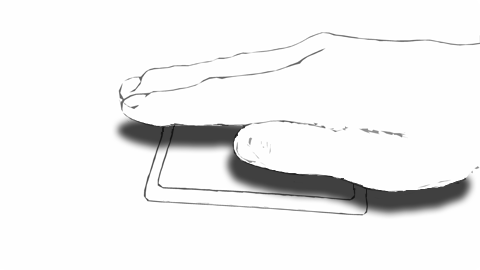

QtSensors.hover | hover | Hand hovers about 4 cm above the phone for more than 1 second, then is removed when face up, using the IR Proximity sensor.

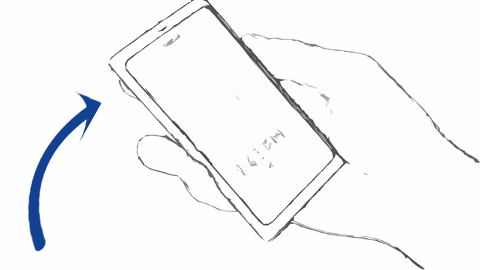

QtSensors.pickup | pickup | Phone is resting face up on a flat surface, and is then picked up and brought up into viewing position. Uses the Accelerometer sensor.

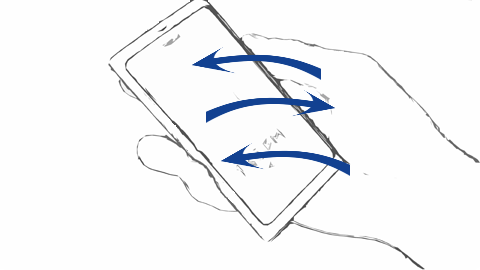

QtSensors.shake2 | shakeLeft, shakeRight, shakeUp, shakeDown | Shake phone in a certain direction, using the Accelerometer sensor.

QtSensors.slam | slam | Phone is held in a top up position with a side facing forward for a moment. Swing it quickly with a downward motion like it is being used to point at something with the top corner. Uses the Accelerometer and Orientation sensors.

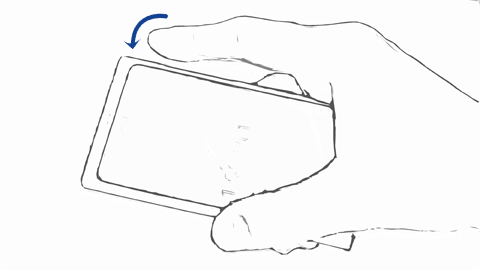

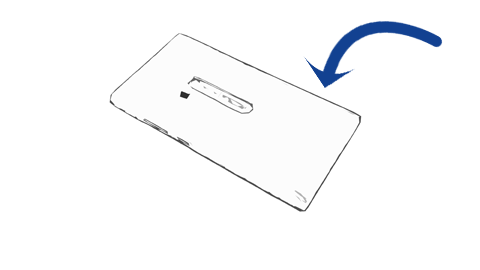

QtSensors.turnover | turnover | Phone is turned face down and placed on a surface, using Proximity and Orientation sensors.

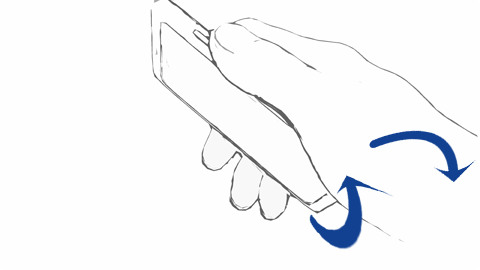

QtSensors.twist | twistLeft, twistRight | Phone is held face up and then twisted left or right (left side up or right side up) and back, using the Accelerometer and Orientation sensors.

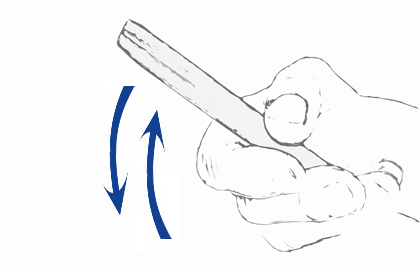

QtSensors.whip | whip | Move phone quickly down and then back up. Uses the Accelerometer and Orientation sensors.
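For application code that needs to check which signals a given recognizer provides, the recognizer IDs and signals listed above can be kept in a small lookup. The sketch below is plain Python with no Qt dependency. The names `BUILT_IN_SIGNALS`, `signals_for`, and `recognizers_emitting` are hypothetical helpers, and the data is copied from the listing on this page rather than queried from Qt at run time.

```python
from typing import Dict, List, Tuple

# Recognizer id -> signals, transcribed from the listing on this page.
BUILT_IN_SIGNALS: Dict[str, Tuple[str, ...]] = {
    "QtSensors.shake": ("shake",),
    "QtSensors.cover": ("cover",),
    "QtSensors.doubletap": ("doubletap",),
    "QtSensors.hover": ("hover",),
    "QtSensors.pickup": ("pickup",),
    "QtSensors.shake2": ("shakeLeft", "shakeRight", "shakeUp", "shakeDown"),
    "QtSensors.slam": ("slam",),
    "QtSensors.turnover": ("turnover",),
    "QtSensors.twist": ("twistLeft", "twistRight"),
    "QtSensors.whip": ("whip",),
}


def signals_for(recognizer_id: str) -> Tuple[str, ...]:
    """Return the signals listed for an id, or an empty tuple if unknown."""
    return BUILT_IN_SIGNALS.get(recognizer_id, ())


def recognizers_emitting(signal: str) -> List[str]:
    """Return the ids (sorted) whose listed signals include `signal`."""
    return sorted(rid for rid, signals in BUILT_IN_SIGNALS.items()
                  if signal in signals)
```

A static table like this can drift from the installed plugins, so it is best treated as a convenience for documentation or tests rather than as a source of truth.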

© 2022 The Qt Company Ltd. Documentation contributions included herein are the copyrights of their respective owners. The documentation provided herein is licensed under the terms of the GNU Free Documentation License version 1.3 as published by the Free Software Foundation. Qt and respective logos are trademarks of The Qt Company Ltd. in Finland and/or other countries worldwide. All other trademarks are property of their respective owners.